Documentation

Demo account: http://scms.icybcluster.org.ua/demo/

Login: demo

Password: cluster

User-friendly program interfaces are now expected in every kind of software. However, the common interfaces for accessing and administering HPC systems are, in fact, far from friendly. Besides knowing the software tools of their own field, users are expected to know purely technical details about the cluster OS, job submission, compilers, runtime environments, etc.

The development of grid technologies does not improve the situation, because the grid is just one more level of complexity. It requires knowledge of grid command-line tools, a new job submission syntax, inter-cluster compatibility of runtime environments, and so on.

Thus, HPC system maintenance remains a difficult and time-consuming task.

SCMS.pro is an attempt to provide an all-inclusive solution for both user access and administrative tasks. This integrated web portal for cluster management lets users easily control their task flow without having to study the numerous details of a supercomputer or grid operating environment. A convenient cluster administration service supports daily routines such as monitoring, user management, and error reporting. The system supports a wide range of hardware and software cluster architectures.

Our project has been deployed and is successfully used on grid clusters at the Institute of Cybernetics, the Institute for Low Temperature Physics and Engineering, and the Institute for Scintillation Materials of the NAS of Ukraine, as well as at a number of other academic institutions of the Ukrainian National Grid.

Features

The main features of SCMS.pro include:

- Installation on virtually any supercomputer running a resource manager such as SLURM or Torque.

- Web-based application for supercomputer access. Platform-independent interface (Windows/Unix/Mac) that works in all major browsers: IE, Firefox, Opera, Chrome, and Safari. Extensive use of Web 2.0 and Ajax technologies.

- Intuitive multilingual GUI designed for both beginners and more experienced users. Only minimal study of the documentation is required.

- Support for ARC (NorduGrid) middleware. Support for gLite and Unicore is in progress.

- Transparent operation in the grid, similar to working on a local cluster, lets users move quickly from the cluster to the grid, with a unified UI across heterogeneous grid clusters.

- Detailed reports about cluster usage statistics.

- Quick access to critical information about supercomputer operation. Detailed cluster status reports.

- Urgent alert messages about critical errors via SMS/E-mail.

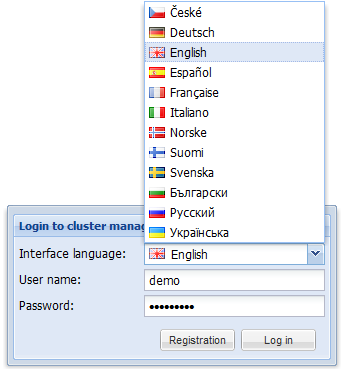

Login and Registration Forms

You log in to SCMS.pro with your usual SSH account login and password. You can also select the desired interface language.

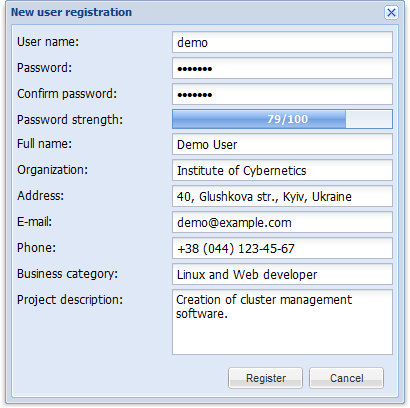

To obtain a cluster account, fill in the registration form. Form fields are checked for validity as you type. The registration request is then sent to the cluster administration for approval.

User Interface

Most scientists work with off-the-shelf software packages. They need an easy and convenient environment for editing input files, launching parallel programs, and viewing task output online. Application programmers use the cluster as a tool for developing and testing parallel programs. They also need an environment for compilation with support for popular compilers and application-oriented libraries, and a source code editor with syntax highlighting.

The interface is designed so that all common user operations can be performed entirely within it. The main operations performed by cluster users are:

- file operations;

- task submission;

- tracking task execution process and viewing task results;

- communication between users and administrators;

- operation in the grid.

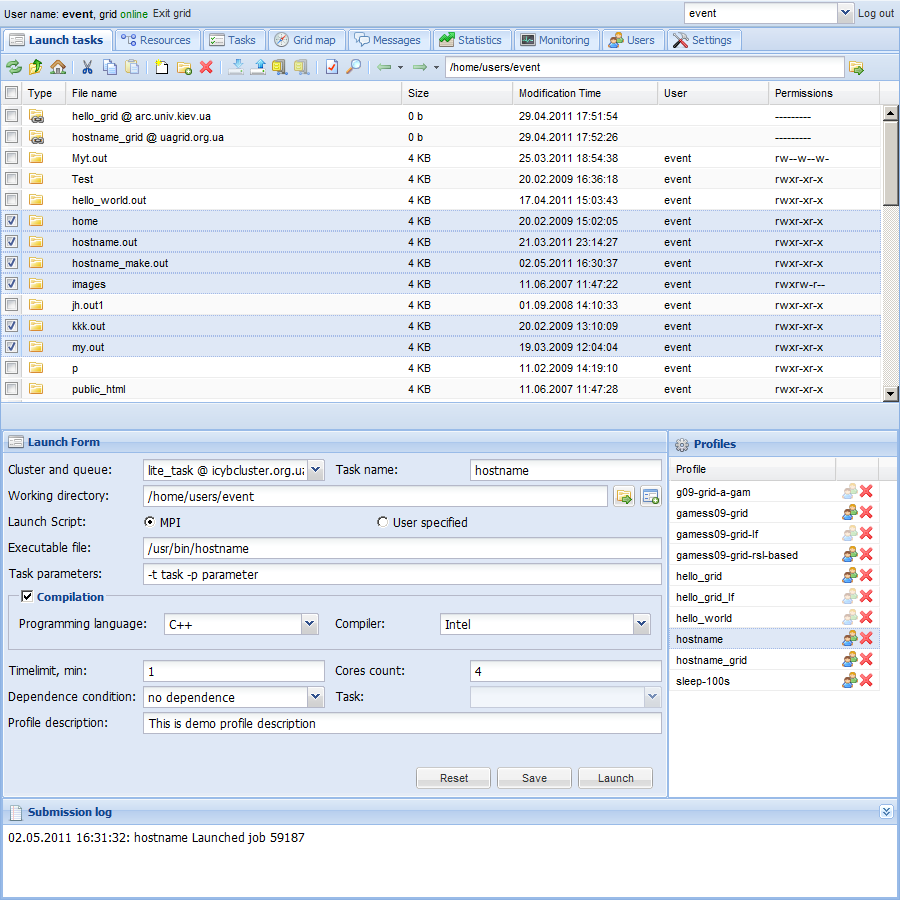

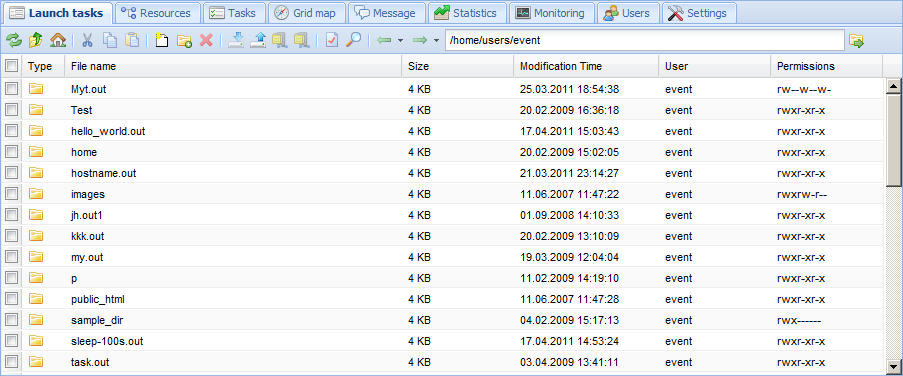

File Management

The File Management tool provides the usual file operations:

- creation of files and folders;

- cut/copy/paste;

- uploading files from the local computer to the user's folder on the cluster;

- downloading files from the cluster and the grid;

- archiving and extracting files and folders;

- inline file viewing and editing with syntax highlighting for popular programming languages;

- file tracking similar to tailf;

- changing attributes;

- searching by regular expression and creation time;

- removal, etc.

The file list can be sorted by name, size, or creation time.
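
The search and sort operations listed above can be sketched in a few lines of Python. This is a minimal illustration rather than SCMS.pro code, and the function names are hypothetical. Because Linux `stat` does not portably expose a file's creation time (`st_ctime` is the inode change time), the sketch uses the modification time as a stand-in.

```python
import os
import re
from dataclasses import dataclass


@dataclass
class Entry:
    name: str
    size: int
    mtime: float  # modification time, used here as a stand-in for "creation time"


def list_dir(path):
    """Return one Entry per regular file directly inside `path`."""
    entries = []
    with os.scandir(path) as it:
        for e in it:
            if e.is_file():
                st = e.stat()
                entries.append(Entry(e.name, st.st_size, st.st_mtime))
    return entries


def search(entries, pattern=None, newer_than=None):
    """Keep entries whose name matches `pattern` and whose mtime is >= `newer_than`."""
    rx = re.compile(pattern) if pattern else None
    return [
        e for e in entries
        if (rx is None or rx.search(e.name))
        and (newer_than is None or e.mtime >= newer_than)
    ]


def sort_entries(entries, key="name", reverse=False):
    """Sort by one of the file list columns: "name", "size" or "time"."""
    keys = {
        "name": lambda e: e.name.lower(),
        "size": lambda e: e.size,
        "time": lambda e: e.mtime,
    }
    return sorted(entries, key=keys[key], reverse=reverse)
```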

Operations on grid files are integrated into the File Manager.

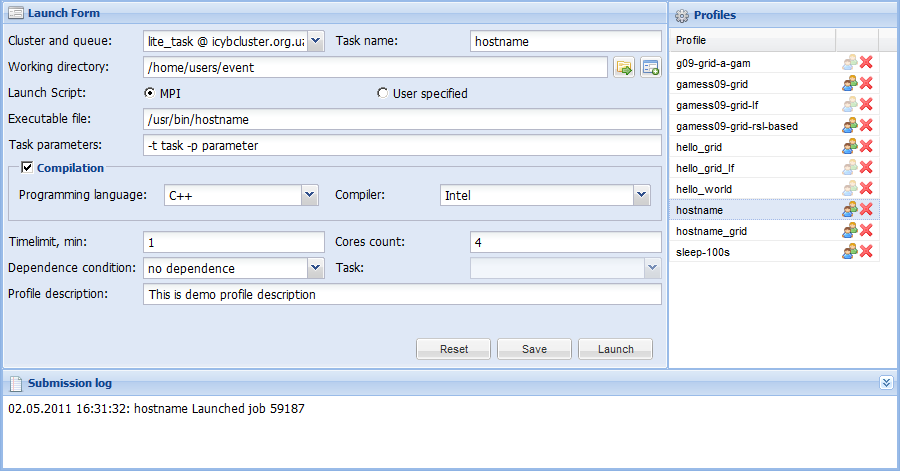

Task Submission to Local Cluster

Tasks are submitted to resource manager queues through the Launch Form, which lets you set all necessary parameters of the computing task.
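
As an illustration of what the Launch Form ultimately has to produce, the sketch below renders a few form fields as a SLURM batch script. The `LaunchForm` fields and function names are hypothetical, and the real form covers more parameters. The `#SBATCH` options used (`--job-name`, `--partition`, `--ntasks`, `--time`, `--output`) are standard `sbatch` options; a Torque backend would emit `#PBS` directives instead.

```python
from dataclasses import dataclass


@dataclass
class LaunchForm:
    """Hypothetical subset of the Launch Form fields."""
    job_name: str
    partition: str
    ntasks: int
    time_limit_min: int
    command: str
    output: str = "slurm-%j.out"  # %j expands to the SLURM job ID


def format_time(minutes):
    """SLURM accepts a time limit written as HH:MM:SS."""
    h, m = divmod(minutes, 60)
    return f"{h:02d}:{m:02d}:00"


def to_sbatch(form):
    """Render the form as a batch script that could be passed to `sbatch`."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={form.job_name}",
        f"#SBATCH --partition={form.partition}",
        f"#SBATCH --ntasks={form.ntasks}",
        f"#SBATCH --time={format_time(form.time_limit_min)}",
        f"#SBATCH --output={form.output}",
        f"srun {form.command}",
    ]
    return "\n".join(lines) + "\n"
```

For example, `to_sbatch(LaunchForm("md-run", "short", 16, 90, "./a.out input.dat"))` yields a script with `#SBATCH --time=01:30:00` and `srun ./a.out input.dat` as its last line.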

An intelligent system for compiling source files is provided. It detects the programming language and selects the corresponding compilation scenario. Scenarios for the Intel and GNU compilers are supported, and the administrator can easily add scenarios for other compilers and programming languages.
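
Detecting the language from the file extension and looking up a scenario table is one simple way to implement this. In the sketch below the tables and command templates are assumptions (MPI compiler wrappers with `-O2`), not SCMS.pro's actual scenarios; in this design, adding a compiler or language amounts to adding table entries.

```python
from pathlib import Path

# Hypothetical extension -> language table.
LANGUAGES = {".c": "c", ".cpp": "cpp", ".cc": "cpp", ".cxx": "cpp",
             ".f": "fortran", ".f90": "fortran"}

# Hypothetical (language, compiler family) -> command template table.
SCENARIOS = {
    ("c", "gnu"): "mpicc -O2 -o {out} {src}",
    ("cpp", "gnu"): "mpicxx -O2 -o {out} {src}",
    ("fortran", "gnu"): "mpif90 -O2 -o {out} {src}",
    ("c", "intel"): "mpiicc -O2 -o {out} {src}",
    ("cpp", "intel"): "mpiicpc -O2 -o {out} {src}",
    ("fortran", "intel"): "mpiifort -O2 -o {out} {src}",
}


def detect_language(source):
    """Guess the programming language from the file extension."""
    lang = LANGUAGES.get(Path(source).suffix.lower())
    if lang is None:
        raise ValueError(f"cannot detect language of {source!r}")
    return lang


def compile_command(source, compiler="gnu", out=None):
    """Build the compile command for `source` using the selected scenario."""
    lang = detect_language(source)
    try:
        template = SCENARIOS[(lang, compiler)]
    except KeyError:
        raise ValueError(f"no {compiler} scenario for {lang}") from None
    out = out or Path(source).stem  # executable named after the source file
    return template.format(src=source, out=out)
```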

There is a special operation mode for parallel software packages (Gamess, Gromacs, Abinit, etc.). In this mode some launch parameters are automatically filled in with the package's default values, which considerably simplifies the use of such packages.
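
Filling in package defaults can be modeled as a dictionary merge in which the values a user sets explicitly take precedence. The package names come from the text above, but the default values in the sketch are hypothetical examples, not the values SCMS.pro ships with.

```python
# Hypothetical per-package defaults; a real site would configure its own.
PACKAGE_DEFAULTS = {
    "gromacs": {"executable": "gmx_mpi", "arguments": "mdrun -deffnm md", "ntasks": 8},
    "gamess": {"executable": "rungms", "ntasks": 4},
}


def fill_launch_params(package, user_params):
    """Start from the package defaults; fields the user actually set win.

    A value of None means "left empty in the form" and does not override a default.
    """
    defaults = PACKAGE_DEFAULTS.get(package.lower(), {})
    explicit = {k: v for k, v in user_params.items() if v is not None}
    return {**defaults, **explicit}
```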

Task submission parameters can be saved to user profiles for later use. This simplifies the routine of launching similar tasks.

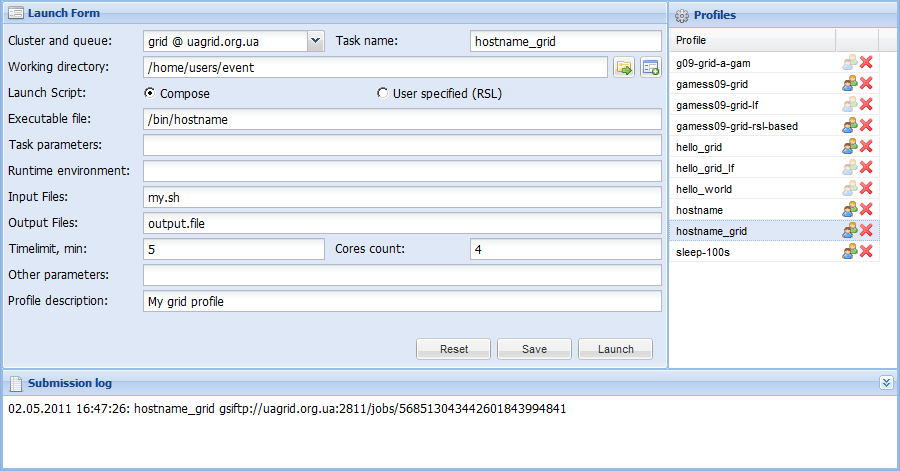
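
One straightforward way to persist such profiles is a JSON file per named profile. The storage layout and function names below are assumptions for illustration only; SCMS.pro runs on PHP and MySQL and may store profiles differently.

```python
import json
from pathlib import Path


def save_profile(directory, name, params):
    """Store one named set of launch parameters as <directory>/<name>.json."""
    d = Path(directory)
    d.mkdir(parents=True, exist_ok=True)
    path = d / f"{name}.json"
    path.write_text(json.dumps(params, indent=2, sort_keys=True))
    return path


def load_profile(directory, name):
    """Read a previously saved profile back into a dict."""
    return json.loads((Path(directory) / f"{name}.json").read_text())


def list_profiles(directory):
    """Names of all saved profiles, sorted alphabetically."""
    return sorted(p.stem for p in Path(directory).glob("*.json"))
```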

Task Submission to Grid

For full support of grid technologies you need a valid grid certificate and grid password. The grid task submission procedure is similar to launching a task on a local cluster: fill in the Launch Form or provide a valid xRSL file.

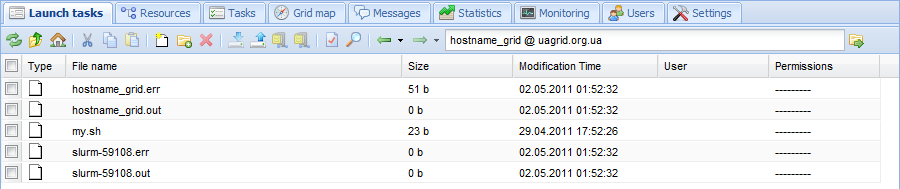
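
To show how Launch Form fields relate to an xRSL job description, the sketch below builds one from a few parameters. It uses standard xRSL attributes (`executable`, `arguments`, `jobName`, `count`, `wallTime`, `stdout`, `stderr`, `inputFiles`, `outputFiles`). The function itself is hypothetical: it treats `wallTime` as minutes, and it rejects values containing double quotes instead of escaping them.

```python
def _q(value):
    """Quote a value as an xRSL string literal (no escaping in this sketch)."""
    s = str(value)
    if '"' in s:
        raise ValueError("double quotes in values are not handled by this sketch")
    return f'"{s}"'


def to_xrsl(job_name, executable, arguments=(), count=1, wall_time_min=60,
            input_files=(), output_files=(), stdout="stdout.txt", stderr="stderr.txt"):
    """Build an xRSL job description from a few Launch Form-like fields."""
    parts = [f"(executable={_q(executable)})"]
    if arguments:
        parts.append("(arguments=" + " ".join(_q(a) for a in arguments) + ")")
    parts += [
        f"(jobName={_q(job_name)})",
        f"(count={_q(count)})",
        f"(wallTime={_q(wall_time_min)})",
        f"(stdout={_q(stdout)})",
        f"(stderr={_q(stderr)})",
    ]
    # File entries are (name URL) pairs. An empty URL means: upload from the
    # client (inputs), or keep on the cluster for later retrieval (outputs).
    if input_files:
        parts.append("(inputFiles=" + "".join(f'({_q(f)} "")' for f in input_files) + ")")
    if output_files:
        parts.append("(outputFiles=" + "".join(f'({_q(f)} "")' for f in output_files) + ")")
    return "&" + "".join(parts)
```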

The system constantly monitors the status of grid tasks and automatically copies the results of completed tasks to the corresponding folder on the local cluster. Task runtime files can be viewed while the task is running and copied to the local cluster for further use.

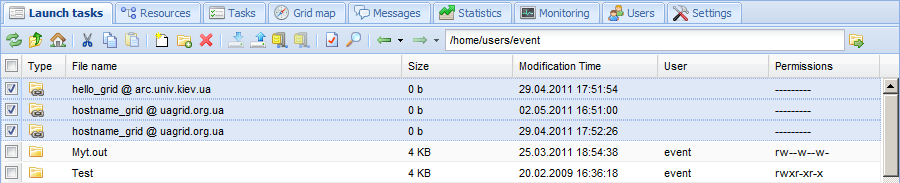
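
The monitoring behaviour described above is essentially a polling loop. In this sketch `get_status` and `fetch_results` are caller-supplied hooks (for ARC they might wrap `arcstat` and `arcget`), and the state names are simplified placeholders rather than ARC's exact job states.

```python
import time

# Simplified, hypothetical terminal states.
TERMINAL = {"FINISHED", "FAILED", "KILLED"}


def watch_grid_tasks(task_ids, get_status, fetch_results, interval=60.0,
                     sleep=time.sleep, max_rounds=None):
    """Poll until every task reaches a terminal state.

    Results are fetched once for each task that ends in FINISHED.
    Returns a dict mapping task ID -> final state.
    """
    pending = set(task_ids)
    final = {}
    rounds = 0
    while pending and (max_rounds is None or rounds < max_rounds):
        for tid in sorted(pending):
            state = get_status(tid)
            if state in TERMINAL:
                final[tid] = state
                if state == "FINISHED":
                    fetch_results(tid)
        pending.difference_update(final)  # stop polling tasks that are done
        rounds += 1
        if pending:
            sleep(interval)
    return final
```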

Grid Task Folders

After a grid task has been launched successfully, the corresponding remote folder appears in the File Manager. Such folders are marked with a chain symbol.

Users can perform the usual file operations on grid files and folders.

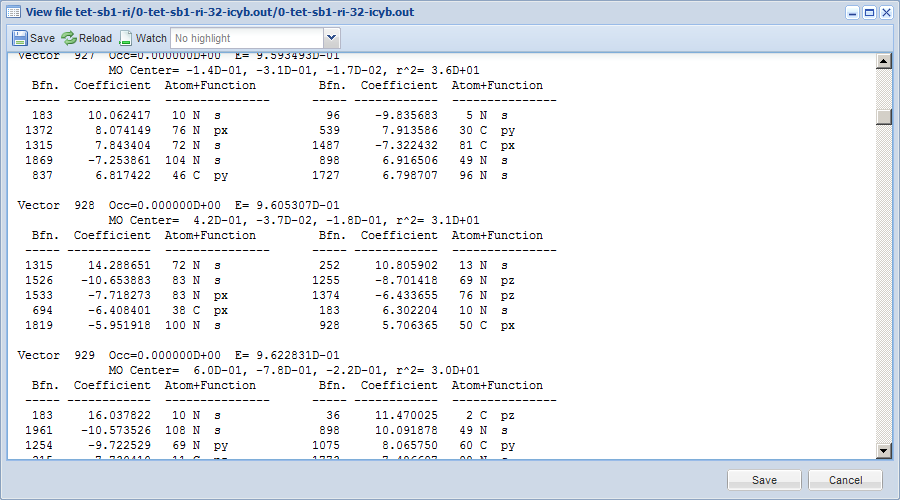

Tracking Task Results

The File Manager includes a File Viewer with syntax highlighting for popular programming languages. It is sometimes convenient to follow file changes in real time with the tailf-like tracking function.

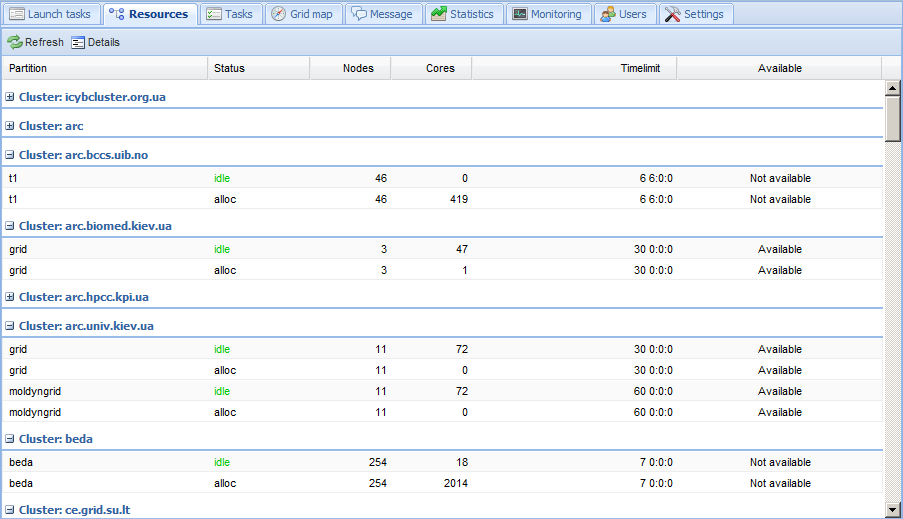
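
A tailf-like viewer boils down to seeking to the end of the file and polling for newly appended lines. This Python sketch shows the idea only: it does not handle log rotation or truncation, and the `stop` and `sleep` parameters are illustrative hooks that make the loop easy to stop and test.

```python
import os
import time


def follow(path, poll=0.5, sleep=time.sleep, stop=lambda: False):
    """Yield lines appended to `path` after the call, like `tail -f`."""
    with open(path, "r") as f:
        f.seek(0, os.SEEK_END)  # start at the current end of the file
        buf = ""
        while not stop():
            chunk = f.readline()
            if not chunk:
                sleep(poll)  # nothing new yet; wait and retry
                continue
            buf += chunk
            if buf.endswith("\n"):  # only emit complete lines
                yield buf.rstrip("\n")
                buf = ""
```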

Resources

You can view the list of local cluster resources (queues) with their time limits and the number of available cores. Grid resources are grouped by cluster hostname. Additional information about the selected partition or queue is available in the Details pane.

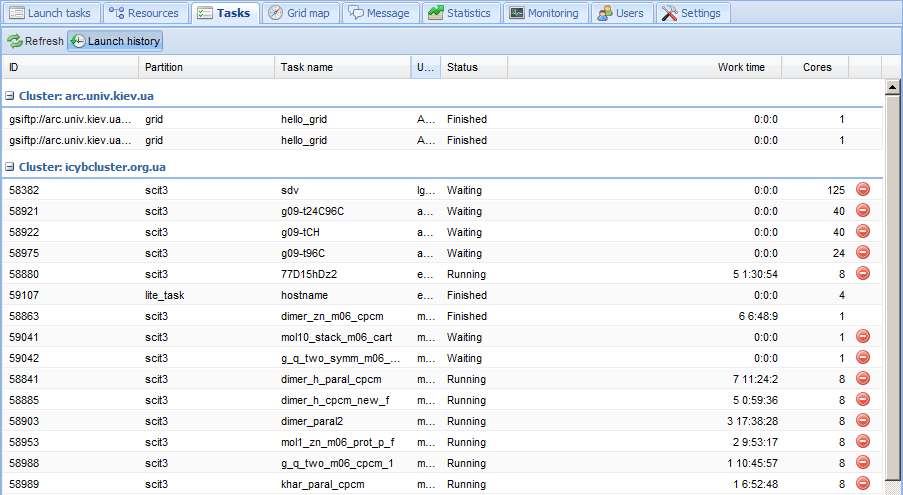

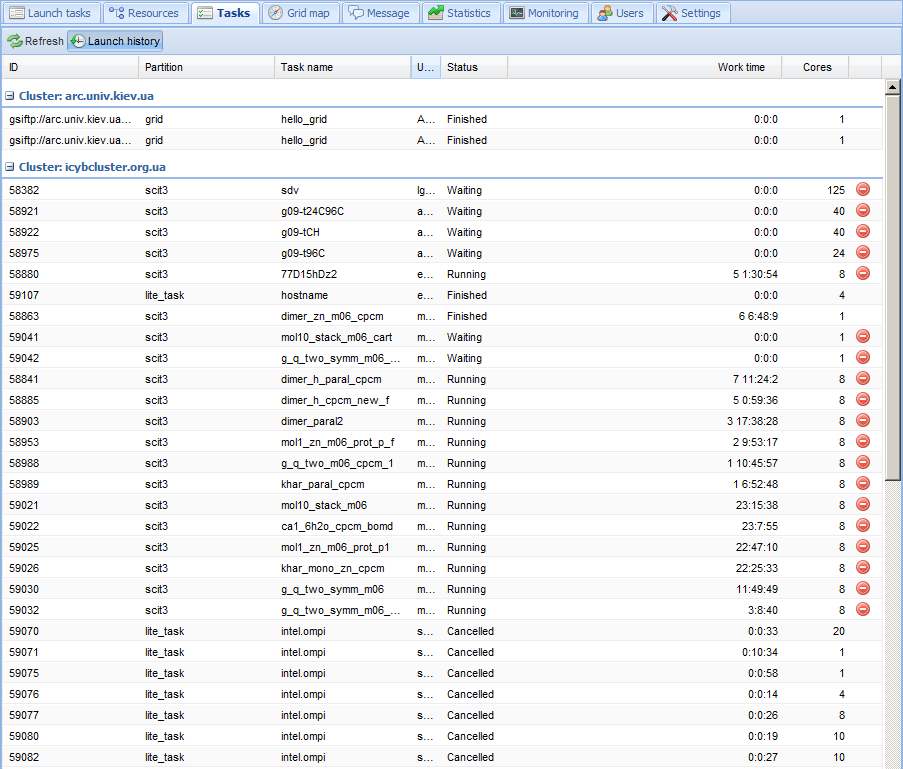
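
On a SLURM cluster, a queue list with time limits and core counts can be obtained from `sinfo`. The sketch assumes the format string `%P %l %C` (partition, time limit, and allocated/idle/other/total CPUs, with the default partition marked by `*`). The `Queue` type and function names are hypothetical, and Torque would need a different backend.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class Queue:
    name: str
    time_limit: str
    idle_cores: int
    total_cores: int
    default: bool


def parse_sinfo(text):
    """Parse `sinfo --noheader --format="%P %l %C"` output into Queue records."""
    queues = []
    for line in text.splitlines():
        if not line.strip():
            continue
        name, limit, cores = line.split()
        _alloc, idle, _other, total = (int(x) for x in cores.split("/"))
        queues.append(Queue(name.rstrip("*"), limit, idle, total, name.endswith("*")))
    return queues


def list_queues():
    """Run sinfo on the cluster and parse its output."""
    out = subprocess.run(["sinfo", "--noheader", "--format=%P %l %C"],
                         capture_output=True, text=True, check=True).stdout
    return parse_sinfo(out)
```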

Task List

You can view the list of all tasks on the local cluster and of your own grid tasks. You can cancel your own tasks. The “Launch history” mode lets you view completed tasks. Additional information about the selected task is available in the Details pane, for example the list of occupied nodes and the submit, start, and end times (for finished tasks only).

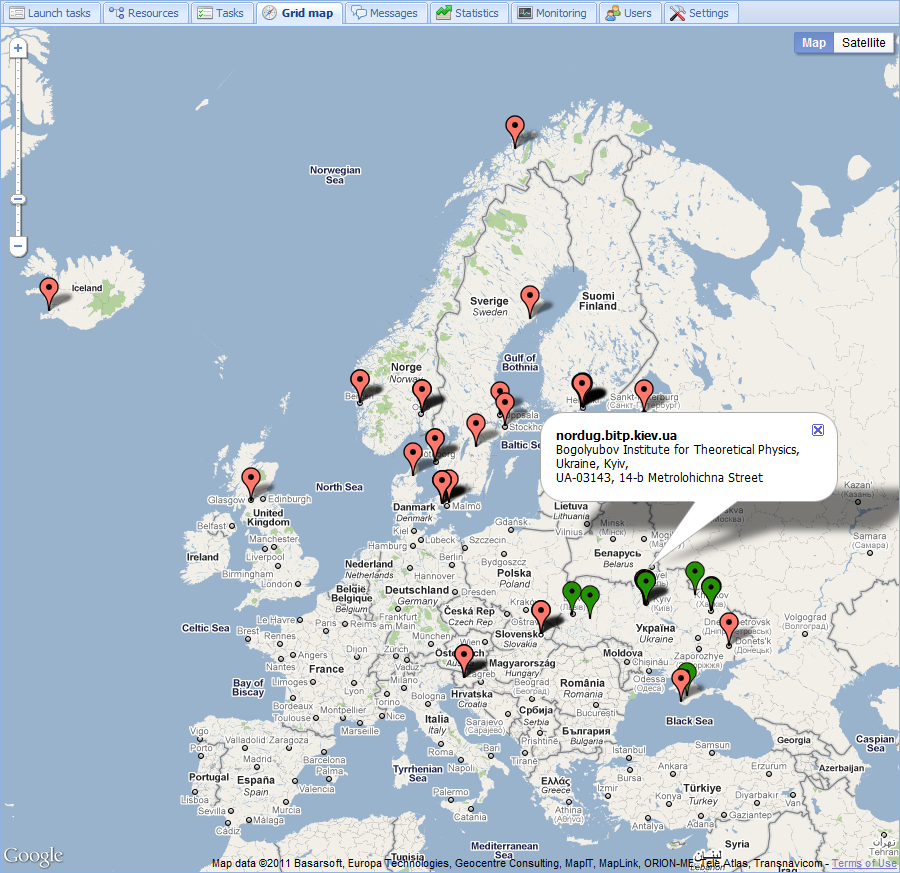

Grid Map

The grid map shows the geographic location and availability of grid clusters. Available clusters are marked in green.

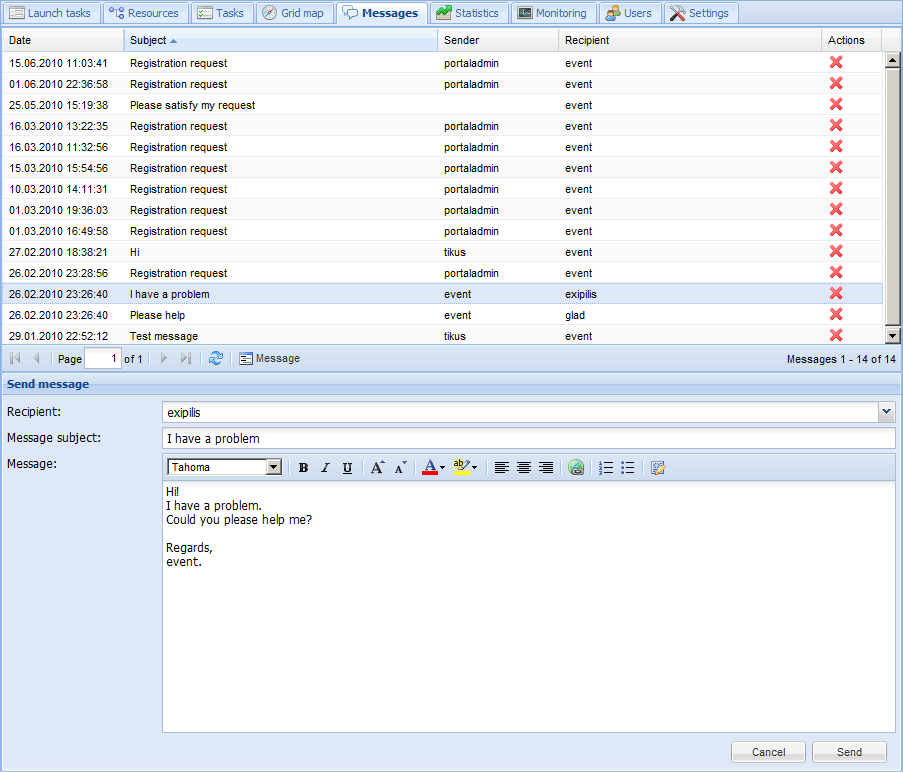

Messaging Service

You can communicate with other users and administrators through built-in messaging. This feature is commonly used to inform the cluster administration about problems with using the cluster.

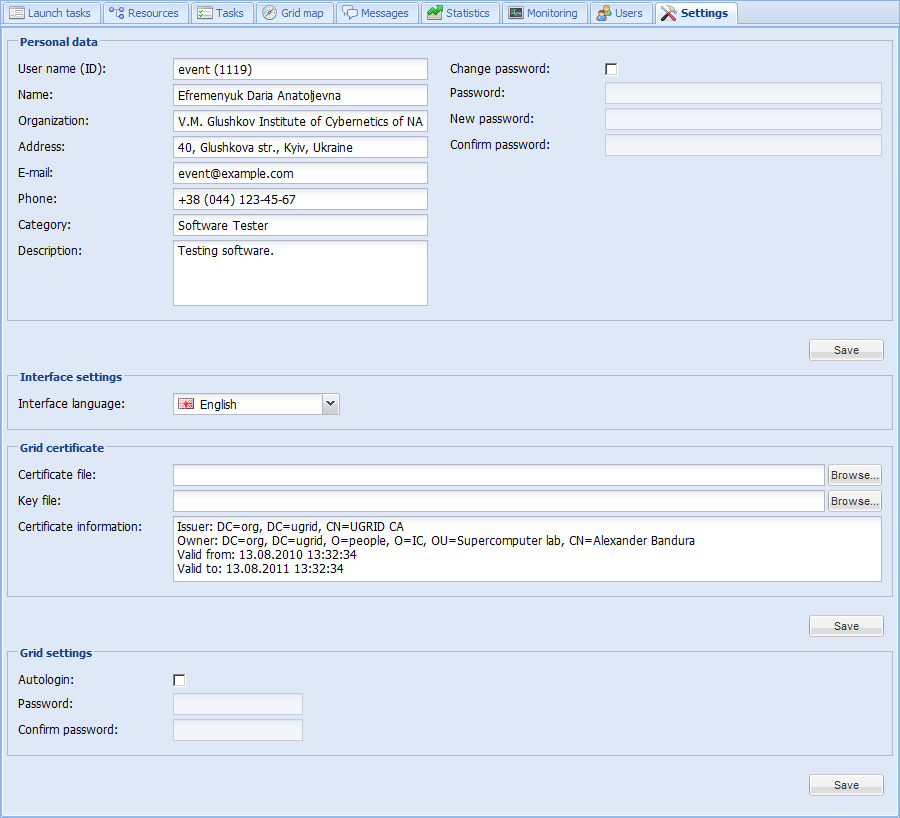

User Settings

The Settings tab allows you to change your personal data and interface settings, install a grid certificate, and set up the grid login mode.

Administrator Capabilities Overview

Administrators organize the computing process on a supercomputer. They have login accounts like ordinary users, but with extended capabilities. The main administrator capabilities are:

- cluster resource management: organizing nodes into queues; blocking, rebooting, and switching nodes on/off; setting nodes to drain;

- task queue administration;

- cluster hardware status monitoring;

- user accounts management;

- switching to another user;

- performing diagnostic tasks;

- analyzing resource usage statistics.

Administrators receive notifications about dangerous situations.

Task Queues Administration

Task queues demand constant supervision. The interface lets administrators view task queues and cancel tasks in case of errors or for other reasons.

Notification about Dangerous Situations

Noncritical error messages are sent to the administrator's E-mail. Critical alerts, such as node overheating or failures of the cooling system or hard disks, are sent via SMS.

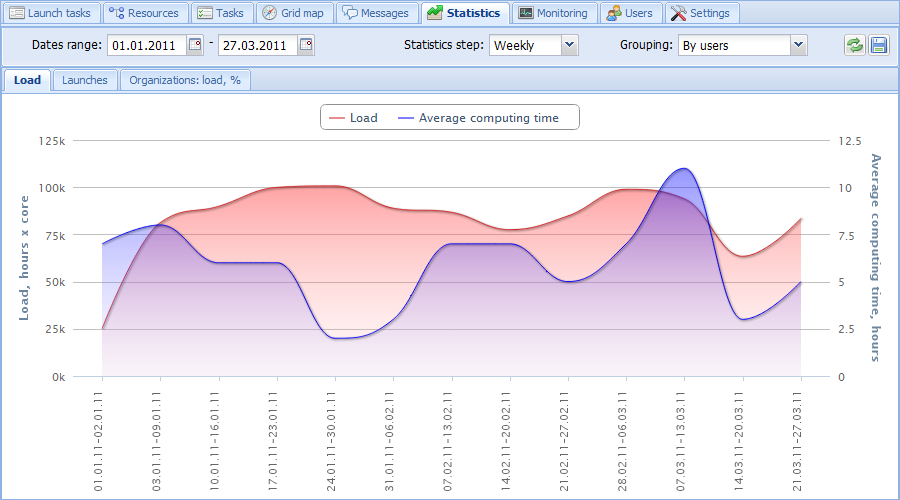
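
The SMS/E-mail split is a severity-based routing rule. In this minimal sketch the set of critical event kinds mirrors the examples above, while the event names, the `Alert` type, and the `send_sms`/`send_email` callbacks are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical event kinds treated as critical (overheating, cooling, disks).
CRITICAL_KINDS = {"node_overheating", "cooling_failure", "disk_failure"}


@dataclass
class Alert:
    kind: str
    host: str
    message: str


def route(alert):
    """SMS for critical events, E-mail for everything else."""
    return "sms" if alert.kind in CRITICAL_KINDS else "email"


def dispatch(alerts, send_sms, send_email):
    """Format each alert and hand it to the channel chosen by `route`."""
    for a in alerts:
        text = f"[{a.host}] {a.kind}: {a.message}"
        (send_sms if route(a) == "sms" else send_email)(text)
```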

Cluster Usage Statistics

SCMS collects information about completed tasks and reports from monitoring sensors. Resource usage statistics can be grouped by user or by organization and exported to either CSV or Excel format.

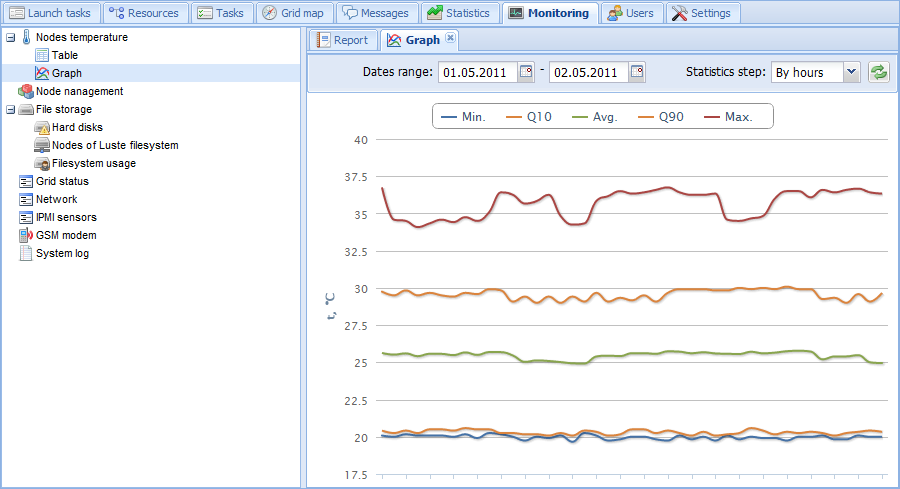
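
Grouping usage statistics amounts to summing a per-task metric by a key. The sketch below assumes core-hours (cores multiplied by wall-clock hours) as the metric and a hypothetical task record layout; it covers CSV export only, not Excel.

```python
import csv
import io
from collections import defaultdict


def core_hours(task):
    """Core-hours used by one completed task; start/end are Unix timestamps."""
    return task["cores"] * (task["end"] - task["start"]) / 3600.0


def usage_by(tasks, key):
    """Sum core-hours per value of `key`, e.g. "user" or "org"."""
    totals = defaultdict(float)
    for t in tasks:
        totals[t[key]] += core_hours(t)
    return dict(totals)


def to_csv(totals, key_name):
    """Render the totals as CSV text, largest consumers first."""
    out = io.StringIO()
    w = csv.writer(out)
    w.writerow([key_name, "core_hours"])
    for k, v in sorted(totals.items(), key=lambda kv: -kv[1]):
        w.writerow([k, f"{v:.2f}"])
    return out.getvalue()
```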

Monitoring

Hardware status requires the administrator's constant attention, and early notification about failures is one of the primary goals of the system. The monitoring subsystem has modules for checking the status of the supercomputer's hardware and software components:

- resource manager (SLURM, Torque, etc.);

- error counters of Ethernet and Infiniband network switches;

- server hard disks and RAID arrays, by means of the operating system;

- Lustre file system;

- node status and temperature, by means of IPMI;

- GPUs (temperature, fan speed, etc.);

- health status of UPS batteries;

- system log;

- GSM/CDMA modem status;

- grid middleware operability.

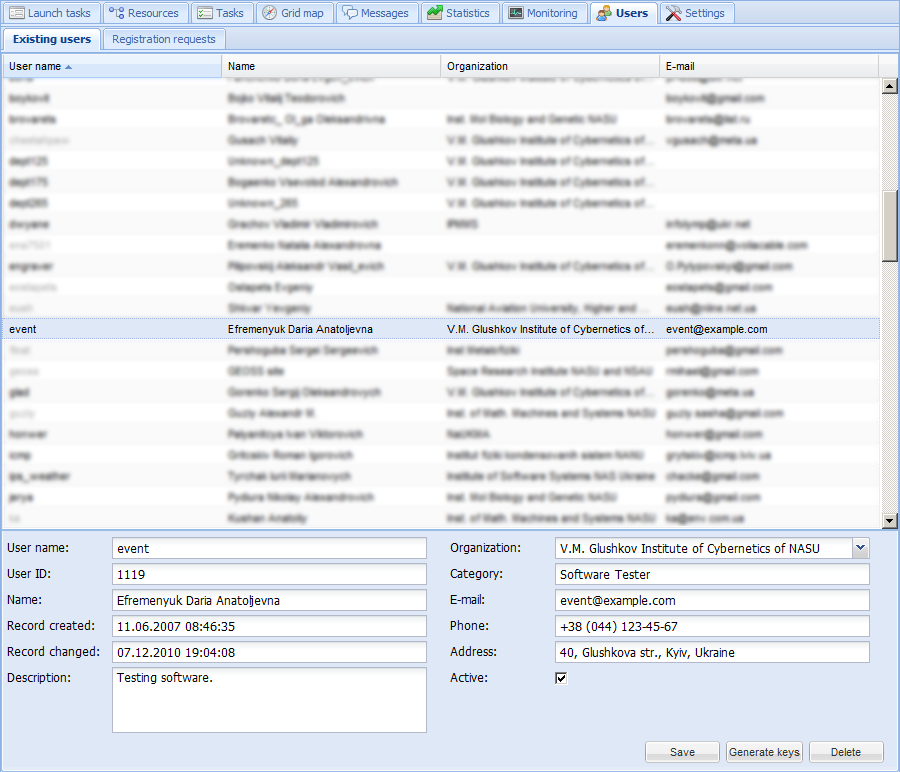

Users Management

The administrator has a full range of user account management capabilities: accepting registration requests, editing account data, and removing users. Switching to another user helps administrators resolve users' difficulties: it allows them to reproduce errors and localize them in the environment where they occur.

Performing Diagnostic Tasks

Diagnostic tasks are a special class of tasks. They are used to obtain cluster performance characteristics and to check the reliability of the whole cluster. These tasks can be launched either on a schedule or on demand. An intelligent analysis of the results, with detection of weak components, is provided.
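
One simple way to detect weak components is to compare each node's benchmark score against the cluster median. This is an illustrative heuristic, not necessarily the analysis SCMS.pro performs; the 0.9 tolerance and the idea of a single per-node score are assumptions. Using the median rather than the mean keeps one very slow node from dragging the reference value down.

```python
from statistics import median


def weak_nodes(results, tolerance=0.9):
    """Flag nodes scoring below `tolerance` x the cluster median.

    `results` maps node name -> score where higher is better
    (e.g. GFLOPS from a per-node benchmark).
    """
    if not results:
        return []
    ref = median(results.values())
    return sorted(n for n, s in results.items() if s < tolerance * ref)
```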

Diagnostic tools check node performance, the Infiniband network, and the health of the Lustre file system. A special overheating protection module shuts down nodes whose temperature exceeds a critical limit.
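
The overheating protection rule can be sketched as a threshold check followed by a power-off through IPMI. The temperatures would come from the IPMI sensors mentioned above; the limit, the function names, and the BMC host naming are assumptions. `ipmitool ... chassis power soft` requests a graceful ACPI shutdown, and `-E` makes `ipmitool` take the password from the environment. With the default `dry_run=True` the sketch only reports the candidates.

```python
def overheated(temps, limit_c):
    """Names of nodes whose temperature (deg C) exceeds the critical limit."""
    return sorted(node for node, t in temps.items() if t > limit_c)


def ipmi_soft_off_cmd(bmc_host, user):
    """ipmitool command line for a graceful power-off via the node's BMC.

    The password is read from the environment (-E), not the command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", bmc_host, "-U", user, "-E",
            "chassis", "power", "soft"]


def protect(temps, limit_c, shutdown, dry_run=True):
    """Call `shutdown(node)` for every overheated node unless `dry_run` is set.

    `shutdown` could be, for example,
    lambda n: subprocess.run(ipmi_soft_off_cmd(n + "-bmc", "admin"), check=True)
    where the "<node>-bmc" naming is a hypothetical site convention.
    """
    victims = overheated(temps, limit_c)
    if not dry_run:
        for node in victims:
            shutdown(node)
    return victims
```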

The system log viewer has a keyword filter that simplifies the analysis of large volumes of text.
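
A keyword filter of this kind keeps only the log lines that mention at least one of the given words. The minimal sketch below uses case-insensitive substring matching by default; the function name and matching rules are assumptions, not the viewer's documented behaviour.

```python
def filter_log(lines, keywords, ignore_case=True):
    """Keep lines containing at least one keyword; no keywords keeps everything."""
    if not keywords:
        return list(lines)
    norm = (lambda s: s.lower()) if ignore_case else (lambda s: s)
    keys = [norm(k) for k in keywords]
    return [ln for ln in lines if any(k in norm(ln) for k in keys)]
```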

System Tech Specs and Compatibility

The system core consists of middleware scripts that interact with the cluster hardware, the resource manager, grid software, etc. These scripts handle all service requests from the user interface and from the monitoring and diagnostic tools. Data transmission is encrypted using OpenSSL, and each user has access only to his/her own files and tasks.

- Cluster OS: Linux (CentOS, RedHat, AltLinux, etc.).

- The system supports major resource managers: Torque, SLURM, PBS-compatible, etc.

- Supported grid middleware: ARC (NorduGrid). Support for gLite and Unicore is in progress.

- User accounts: LDAP, /etc/passwd. Authentication of users: LDAP, PAM.

- Temperature and hardware failure sensors: IPMI.

- Web server: Apache, PHP, MySQL.

- GSM/CDMA modem (optional).